Am I going to be left in everyone's AI dust?

My apathy about AI makes me nervous. I'm trying to find my curiosity for it, but it's hard.

Quick note: Paid subscribers, we have our virtual book club on The Other Significant Others with the author Rhaina Cohen on Wednesday, July 30 at 5pm PT! I’ll send the Zoom link in a few days, but mark your calendars! More info here. If you’re not a paid sub and want in, you can upgrade! Would love to see you there.

My current (non) relationship to AI

I don’t use an AI chatbot.

Or rather, I have used ChatGPT 20 times. I know the exact number because each search is listed in the sidebar and I just counted them.

I remember each of those searches because they were really specific: questions I couldn’t find straightforward answers to, even after going to the websites themselves and cross-referencing information. I figured ChatGPT could help with my overwhelm by culling the entire internet. 20 specific times. That’s it. It’s strange, though, because I am a person who is perfectly primed to use it regularly: to ask it the philosophical or creative questions I know other people are asking, or to have it solve some of my executive functioning or project management issues (of which there are many).

And yet, I don’t really care about AI. Or rather, I sort of forget to remember that I could (or is it should?) care about it.

Historically, I have been an early adopter of many different tech things, finding it fun and adventurous and additive to be on the cutting edge of change (minus VR because lol). Amongst many of my friends, I'm sometimes known as the tech support who can help figure out why their phone or computer isn’t working just by playing around with it. And I love being that person! It’s fun for me to do that sort of play.

The first question I want to explore here is: why haven’t I adopted that same perspective for AI?

I want to be clear: I really do find Generative AI incredibly compelling in the macro sense.

I listen to a lot of podcast conversations about it, read books and articles and talk quite a bit about the impact AI is having on the environment and on jobs, and what it will do to our brains. I’m deeply interested in the ways it will change industries and humanity, both good and bad. Also, I’m surrounded (professionally and personally) by people who use AI all the time. And I feel excited watching over peoples’ shoulders as they integrate AI in increasingly creative ways into their lives.

Caveat here: I do not judge a single person for using AI[1]. I understand how valuable it is to people’s creativity, neurodivergence, time-saving, minimizing workload, overcoming challenging things like writing, brainstorming or synthesizing. I am not trying to be pick-me, like “look at me, so cool for not using AI!!” or judgmental towards people who use it. Quite the opposite.

I actually feel insecure that I am going to be left behind while everyone flies high with AI.

Exploring my resistance to AI

For some reason, I feel like a Luddite when it comes to using AI myself. As I think about where my resistance lies currently, I think it comes down to three things:

I am accustomed to putting in work to see progress. That ratio has worked for me, so I haven’t found a need to change it

I sort of *like* putting in work

I’m not drawn to optimization

Putting in effort is what I know

This first reason is more subconscious: if I’m being honest, it’s not like I’m protesting AI on moral or ethical or environmental grounds (although I’m well aware of and respect why many do). I just don’t really think to use it.

Having to do something (writing, brainstorming, planning, etc.) and then sitting down and working on it is a familiar muscle to me. Something will take as long as it needs to take. That’s how I’ve spent my life up until this point, so I haven’t found reasons to change a strategy that’s worked for me[2].

Because here’s the reality: I have seen a direct correlation between the work I put in and the progress I see. When I work hard, when I show my work in progress, when I ask for feedback, when I push deadlines because I underestimated how long something would take, when it takes me reading multiple think pieces to understand a movie, I can generally follow the link between the effort I’m putting in and the reward I’m seeing, be it finding the answers I need, completing the deliverable, or getting the job. It doesn’t mean I always “succeed” at whatever I’m working towards, but when I work hard intellectually and cognitively, I usually see some sort of fruit from that labor. Maybe it’s growth, or learning something new, or meeting the right connection. That’s a satisfying process for me and I haven’t felt a need to change it.

Putting in effort is rewarding for me

On the effort piece, I really enjoy the process of putting in work. It’s both familiar to me, as mentioned, and also deeply satisfying. I really like how much intellectual effort I put in. I am really proud of my brain, proud of the ways I plan out the structure of an argument or a meeting, or how I see through-lines between disparate topics, or ask big questions. The process of having to write a template, or create a deck, or figure out how to communicate x or y thing can be dreadful and hard and also, when it clicks into place, really really satisfying. I’m not quite sure I want to change that effort; that moment of it all locking into place BECAUSE of the work I put in provides me with such foundational pleasure. Another element here in my own life is that not only do I find the effort rewarding, but that effort has been rewarded. My brain and work have led me to amazing jobs and opportunities, not to mention self-knowledge and relationships. I am generally seen as smart because of that intellectual labor.

This is where it can get complicated: we don’t live in a meritocracy. I don’t want to insinuate that “if you just put in work, you’ll see results!” No, absolutely not. Obviously, success is steeped in privilege, with some luck, circumstance, and hard work baked in. There’s an interesting conversation to be had around the ways that AI might even the playing field in this regard (and while I’m skeptical of any sort of techno-optimism, I’m curious about that topic). But generally, I think what I’m getting at here is that I don’t want to lose my experience of thinking, of working to create something, of using my brain and my experiences to make something out of the blank cursor on a page. I really like that part of life.

I don’t want to optimize

And then the third part, which is related to the first two, is that I guess I like how things take a while. How sometimes I move slow. I like that putting in work on something might mean starting the draft two weeks before you have to submit it because you need to let it breathe (like for example, I started this Substack post 1.5 weeks ago and have come back to edit it so many times). At this stage of my life, I am trying to purposefully move away from the “productivity” dichotomy and into ease. The rhetoric around how AI tools will optimize everything feels really misaligned with what’s important to me now. I don’t really care about that? Because what is the point of productivity? More money, more work, more stress, more more more? Is it really going to “give me time back,” as if putting in work on something is just a net-negative and has no gain on its own? It feels like the exact hamster wheel I’ve been working to get off over the last two years.

Well... if I’m so clear-eyed about this, what am I worried about?

I have a lot of people in my life who, through using AI, have genuinely and vastly improved their efficiency at work, or have been able to offload the more dreadful and laborious tasks like making or analyzing charts, or have just learned cool things about themselves or the world through their conversations with AI bots. Whenever I hear them talk about it, or when I see what they’ve been able to accomplish with it, I feel pangs of stress, intimidation, or unease. It feels like I’m being stubborn at this point, like I am “leaving things on the table” (new skills, efficiency, outputs) just because I don’t really care about AI. Is that a good enough reason?

What it feels like to me is an undercurrent building. If I don’t gain fluency in these tools, I will soon be left behind while everyone else rides some wave to ultimate success and satisfaction.

I say I love the process of thinking and working at whatever pace the work requires. And I do! But will I still appreciate that process if suddenly my work is smaller, slower, less flashy than everyone else’s? What will it feel like to be “left behind” by that wave? Will it be so dramatic?

Moreover, what might be the acute impacts of my current AI apathy? Will I tangibly lose opportunities for work, success or money because my knowledge seems too slow, or not expansive enough, or not as polished as others’? For example: I made a Miro board recently and realized, wow doing that sort of creative templating is not my strong suit. I didn’t even think to put an assignment like that into ChatGPT until just now, but I love the idea that my board is kind of janky. I had to push myself artistically for an ok product, but I feel like I practiced a hard thing and it was received well. Will slightly-janky presentations made with intentionality and effort always be acceptable in an AI-perfection future?! I assume not.

So how do I reconcile these things: that on the one hand, I want to protect my treasured process of cognitive effort, and also I worry that by not gaining an AI fluency, I will be wiped out by a tidal wave that will produce more (and better?) than I ever could?

Remembering what matters

I think often about how I got started on TikTok—how one day in 2022 I decided to post a video because I couldn’t stop thinking about this one podcast episode. And then I basically just kept that curiosity as my main compass, nudging me when I wanted to post (when I was lit up by something and wanted to discuss it, I would pop off a video). I still don’t script out my videos, I don’t have any sort of content calendar, and I don’t optimize any of my workflows. If I posted a video today about a book and then you see me at a cafe that afternoon, I will actually be reading that book. It’s of this moment. Which means that, at least from my understanding, I will not reach the heights of success (financially, specifically, but also follower count etc.) of someone who has optimized their social media.

For example: I got coffee with a TikTok mutual friend who told me all about her incredible workflows to get brand deals: how she has created canned Instagram DMs to shoot off to brands based on whether they’re B2C or B2B. How she uses all these tools to push her content out across all platforms at the same time. I left that coffee date feeling multiple things at once:

Really inspired by the heights she’s been able to reach. Thinking, should I start to get some of those tools set up for myself? Look at how much money she’s making and how easily it’s coming to her because of all the automations she’s set up!

Knowing in my gut that all of those tools will taint my relationship to the app and to content creation and I don’t want to ruin what I have

Being angry at myself for not being more interested in that optimization! Knowing I could be making so much more money and that the decision is, on some level, in my own hands...

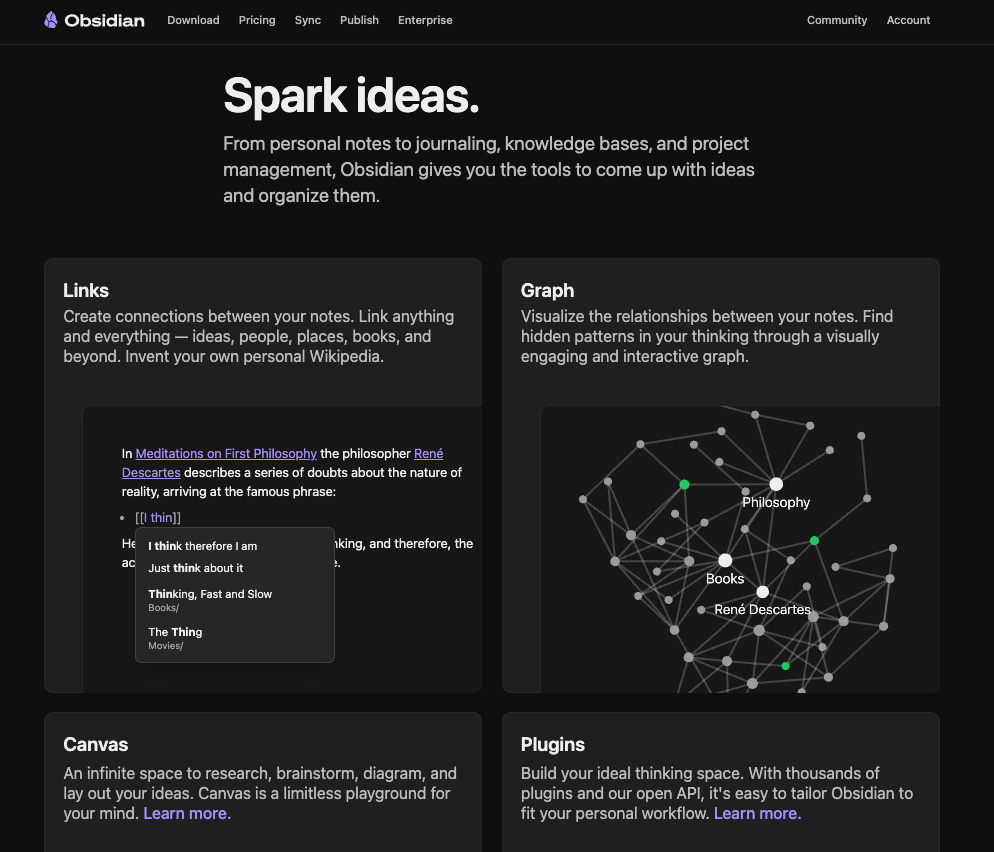

I recently read this article, I Deleted My Second Brain, and it’s helped me think through this confusion a bit. It’s an article from Joan Westenberg about how she deleted her entire Obsidian vault cold turkey: 7 years’ worth of notes, quotes, lists, writing ideas. Gone. I hadn’t heard of Obsidian before, but it’s a really interesting PKM (Personal Knowledge Management) system where you can link all of your notes together and create maps and webs between your ideas to store, synthesize, and spark new ones. I mean, it’s SO COOL.

Joan’s entire life was on there. And then, suddenly she erases it. She writes:

The “second brain” metaphor is both ambitious and (to a degree) biologically absurd. Human memory is not an archive. It is associative, embodied, contextual, emotional. We do not think in folders. We do not retrieve meaning through backlinks. Our minds are improvisational. They forget on purpose.

Merlin Donald, in his theory of cognitive evolution, argues that human intelligence emerged not from static memory storage but from external symbolic representation: tools like language, gesture, and writing that allowed us to rehearse, share, and restructure thought. Culture became a collective memory system - not to archive knowledge, but to keep it alive, replayed, and reworked.

In trying to remember everything, I outsourced the act of reflection. I didn’t revisit ideas. I didn’t interrogate them. I filed them away and trusted the structure. But a structure is not thinking. A tag is not an insight. And an idea not re-encountered might as well have never been had.

We are all so committed to not forgetting words, ideas, concepts, books, things we learn. We want some record where they’re all stored and memorialized (à la our AI bot chat history). But the act of reflection is work. It’s having to stretch our brains to dig up that old thing from 10 years ago that reminds us of this thing from today, and suddenly we realize omg they’re related! Or having to look up a word multiple times because for some reason it doesn’t stick in my head. That stuff is tedious af and it’s so much easier to outsource it. But what do we lose when we outsource? Or maybe the question is, are there ways we can outsource some things and protect the most important parts of our minds?

When I think of how I make my videos on TikTok, with their chaos and rawness (which I think has also been the reason I’ve seen success over the years!), I have to remind myself that my relationship to those online tools is exactly how I want it to be: intentional, purposeful, from my brain and in my own words. I want to have to work to connect dots between thoughts. I want to read people’s comments and realize I got it wrong, or maybe partially wrong. I want someone to tag me in a video because something reminds them of me, and then suddenly I’m in a wormhole watching all of THAT person’s videos. It’s so manual. Which means sometimes I dread it, or I get overwhelmed, or I wish I had someone reading and responding to my comments because I can’t take it all. But at the end of the day, I love that messiness. It makes me feel connected to the work I’m doing and to, well, life.

Joan writes a similar sentiment towards the end of her piece:

I don’t think I want a map of everything I’ve ever read. I want a mind free to read what it needs. I want memory that forgets gracefully. I want ideas that resurface not because I indexed them, but because they mattered.

What does it feel like to start again?

Like swimming without clothes. Light. Naked. A little vulnerable. But cleaner than I’ve felt in years.

I write knowing it may disappear. I highlight books knowing the highlights will fade. I trust that what matters will return, will find its way to the surface. I no longer worship the permanence of text.

There is a Hebrew word: “zakhor.” It means both memory and action. To remember, in this tradition, is not to recall a fact. It is to fulfill an ethical obligation. To make the past present through attention.

My new system is, simply, no system at all. I write what I think. I delete what I don’t need. I don’t capture everything. I don’t try to. I read what I feel like. I think in conversation, in movement, in context. I don’t build a second brain. I inhabit the first. Drawing on something DHH (37Signals) told me a couple of years ago, I’ve started keeping a single note called WHAT where I write down a handful of things I have to remember. The important bits will find their way back.

I don’t want to manage knowledge. I want to live it.

And yet, I don’t think the answer is just “don’t ever use AI.” Is it?

I decided to go to Claude and ask it basically the exact questions I’ve been pondering in this essay. This was my prompt:

The answer is, for better or worse, sort of exactly what I was looking for.

What "being left behind" might actually look like:

The dramatic "left behind" narrative is probably overblown. History shows us that technological adoption is rarely all-or-nothing. People who never learned to touch-type still participate fully in digital life. Many successful professionals use smartphones without understanding how they work.

That said, there are some practical considerations. In certain fields, AI fluency might become like basic computer literacy was in the 1990s - not strictly required everywhere, but increasingly expected for efficiency and competitiveness. You might find yourself spending more time on tasks that others can do quickly, or missing out on certain opportunities where AI skills are assumed.

But there's also a counterpoint: as AI becomes more ubiquitous, interfaces will likely become more intuitive. The current moment requires more technical knowledge than future versions probably will.

A realistic future scenario:

Rather than being "left behind," you might find yourself in the position of someone who uses AI tools occasionally and pragmatically - when the benefit clearly outweighs the learning curve - but doesn't build your work or thinking around them. This is probably where many people will end up, and it's a perfectly viable path. You'd adapt when necessary but maintain your preference for direct engagement with problems.

Your instinct to not rush into something you're ambivalent about has wisdom to it. The technology will still be there when and if you decide you need it.

I love this. I think, after writing this piece and sitting/thinking on it for a week or so (thinking! effort!), and reading more articles about this, I fully accept my apathy and resistance. I feel grateful for my appreciation of my own thinking-process, and my commitment to preserving that. I don’t want to feel guilty or ashamed or insecure that I have some serious AI-apathy.

I started this piece with more resentment than I feel now, a week and a half later. I started it thinking “I don’t want to have to learn AI! Why can’t we just continue to use our brains?!” Now, I don’t know that I feel so black and white about it (and Claude agrees, lol). I was really inspired by this Op-Ed in the NYTimes from a writing professor who has been experimenting with the different AI tools. She knows it’s here and she wants to understand the ways her students are using the tools. She writes about her first experience with the internet:

When I was an undergraduate at Yale in the 1990s, the internet went from niche to mainstream. My Shakespeare seminar leader, a young assistant professor, believed her job was to teach us not just about “The Tempest” but also about how to research and write. One week we spent class in the library, learning to use Netscape. She told us to look up something we were curious about. It was my first time truly going online, aside from checking email via Pine. I searched “Sylvia Plath” — I wanted to be a poet — and found an audio recording of her reading “Daddy.” Listening to it was transformative. That professor’s curiosity galvanized my own. I began to see the internet as a place to read, research and, eventually, write for.

She then goes on to say that, of course, integrating AI into the classroom poses significantly more challenges than the internet did. Professors’ skepticism is necessary. And yet what I also took from her piece was the question: to what extent can those tools aid my curiosity, as opposed to dampening it? I don’t want to be so obstinate that I miss something that changes everything, not even in terms of money or opportunities, but in what it might offer humanity.

To go back to the question I posed Claude about this, I can’t deny that the AI tool reassured me with its final line:

Your instinct to not rush into something you're ambivalent about has wisdom to it. The technology will still be there when and if you decide you need it.

Question: what is your relationship to AI today?

I’d love to hear from you: do you use AI in your own life, and if so, how?

If you don’t use it, how do you feel about its omnipresence?

If you do use it, is it to do the tasks that feel laborious or hard for you?

Is it to “save time?”

Is it for creative brainstorming? Any/all of the above?

And also... how do you think about its brain in relation to your own?

Additional media about this topic

Podcasts

From the podcast Tech Won’t Save Us: We all suffer from OpenAI’s pursuit of scale w/Karen Hao (I feel like maybe we should read her book for our book club?)

Ezra Klein’s recent podcast episode: How the Attention Economy is Devouring Gen Z—and the Rest of Us

Reading

A SUPER thorough and interesting Medium article that ranks AI bots by levels of evil: I love Generative AI and I hate the companies building it

From TIME magazine: ChatGPT May Be Eroding Critical Thinking Skills, According to a New MIT Study

NYTimes Op-Ed: I Teach Creative Writing. This is what AI is Doing to Students

What are other things you read/listened to about AI recently that got your brain whirling?

[1] I mean, there are significant systemic consequences to using AI, but I know most of the people who use it are just trying to make their lives easier, and who tf am I to judge…

[2] I do think there is a really important conversation around neurotypical and neurodivergent brains here! Like even when work is hard for me, as a neurotypical person, I am not pained or tortured in the way that neurodivergent people might be with this kind of work. And I really respect that.

I used to be pretty apathetic toward AI until this month, when I started using it for my job search, mostly because I hate the job search process so, so much that when I realized I could use AI for my resume, my LinkedIn, and finding jobs, I was hooked. BUT (and this is a big but) this comes from my feeling that the job search is so inauthentic and curated anyway: “Sell yourself, format your resume with random metrics to get through the ATS, make your LinkedIn reach higher.” So I was just like, okay AI, do what you can for me, because being this curated is so incredibly exhausting just to get a recruiter to see me.

BUT in areas where it’s more of a downhill flow for me to be myself, like my writing or the interviews themselves, I wouldn’t really use AI, and I’m fairly apathetic about how it could help me there. That may be partly why you’re apathetic towards AI: you have put yourself in a space where you can be yourself, doing work that doesn’t require you to play a specific character. Hmm, maybe that’s what it is? There may be more and more spaces that ask us to act in certain ways (Instagram, LinkedIn, etc.), and that’s coupled with AI that easily gets you there. Not sure, but that’s interesting.

But honestly our own thinking and putting ourselves in places that stretch us is going to be so important, and your affinity to that is a gift for sure!

I am one of those people who doesn't use generative AI in my personal life because I am pretty much 100% against it. I find it to be deeply unethical for plagiarism and environmental reasons, and so far I do not feel like I am missing out on some amazing new tool. I just generally do not feel like it will make any part of my life easier that I want made easier. I am so tired of optimization and convenience being sold as the solution, and I feel like generative AI fits into that talking point.

At work I use an AI voiceover creation program and I feel icky about using it but I don't have an alternative solution and it's approved all the way to the top of the organization so I don't feel like I have any ability to sway that decision.

I definitely had to check my immediate outrage when I started reading this, and always appreciate your thoughtfulness and willingness to think out loud!